Earlier VTO techniques, such as geometric warping, could adapt clothing images to a silhouette, but the results often fell short of a natural look. The focus therefore shifted to generating every pixel of a garment from scratch to produce high-quality, realistic images, an aim achieved by the new diffusion-based AI model, Google said in a press release.

Diffusion, in this context, refers to the process of gradually adding noise (random changes to pixel values) to an image until it becomes unrecognisable, then removing that noise step by step until the original image is reconstructed. Text-to-image models such as Imagen combine diffusion with text encodings from a large language model (LLM) to generate images from a text prompt alone.
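As a rough illustration of the noising and denoising idea, the sketch below blends a handful of pixel values with Gaussian noise and then undoes the blend. It is a minimal sketch, not Google's implementation: the linear noise schedule, the function names (`alpha_bar`, `add_noise`, `remove_noise`) and the parameter values are all assumptions made for the example. In a real diffusion model the noise is not known in advance; a neural network is trained to estimate it.

```python
import math
import random


def alpha_bar(t, num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal left after t noising steps.

    Assumes a linear schedule of per-step noise levels (hypothetical values).
    """
    product = 1.0
    for s in range(1, t + 1):
        beta = beta_start + (beta_end - beta_start) * (s - 1) / max(num_steps - 1, 1)
        product *= 1.0 - beta
    return product


def add_noise(pixels, t, num_steps, rng):
    """Forward step: mix each pixel with Gaussian noise for timestep t."""
    abar = alpha_bar(t, num_steps)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [
        math.sqrt(abar) * p + math.sqrt(1.0 - abar) * n
        for p, n in zip(pixels, noise)
    ]
    return noisy, noise


def remove_noise(noisy, noise, t, num_steps):
    """Reverse step, assuming the noise is known exactly.

    A trained model would supply an estimate of `noise` instead.
    """
    abar = alpha_bar(t, num_steps)
    return [
        (x - math.sqrt(1.0 - abar) * n) / math.sqrt(abar)
        for x, n in zip(noisy, noise)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    original = [0.1, 0.5, 0.9]  # toy "image" of three pixel values
    noisy, noise = add_noise(original, t=500, num_steps=1000, rng=rng)
    restored = remove_noise(noisy, noise, t=500, num_steps=1000)
    print("noisy:   ", noisy)
    print("restored:", restored)
```

With the exact noise supplied, the reverse step recovers the original values up to floating-point error; the quality of a real model depends on how well its network estimates that noise.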

However, this new approach to VTO takes a slightly different angle. The diffusion process takes a pair of images as input: one of the garment and one of a person. Each image is processed by its own neural network, and the two networks share information through a mechanism termed ‘cross-attention’ to generate the final photorealistic image of the person wearing the garment.
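The report does not describe the internals of Google's networks, but cross-attention in general can be sketched as follows: features from one input (here, the person) act as queries, and each query gathers a weighted mix of features from the other input (here, the garment). This is a generic scaled dot-product sketch under stated assumptions; the feature vectors, the function names and the absence of learned projection weights are all simplifications for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention from one feature set to another.

    queries: feature vectors from the first input (e.g. the person image)
    keys, values: feature vectors from the second input (e.g. the garment)
    Returns the mixed features and the attention weights for each query.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Hypothetical 2-dimensional features; real models use learned,
    # much higher-dimensional representations.
    person_features = [[1.0, 0.0], [0.0, 1.0]]
    garment_features = [[4.0, 0.0], [0.0, 4.0], [1.0, 1.0]]
    mixed, weights = cross_attention(
        person_features, garment_features, garment_features
    )
    for row in weights:
        print([round(w, 3) for w in row])
```

Each person feature ends up weighted most heavily towards the garment feature it most resembles, which is the sense in which the two inputs "share information".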

The AI model was trained on data from Google’s Shopping Graph, which Google describes as the world’s most comprehensive data set of the latest products, sellers, brands, reviews, and inventory. Training used numerous pairs of images, each pair showing a person wearing a garment in two different poses.

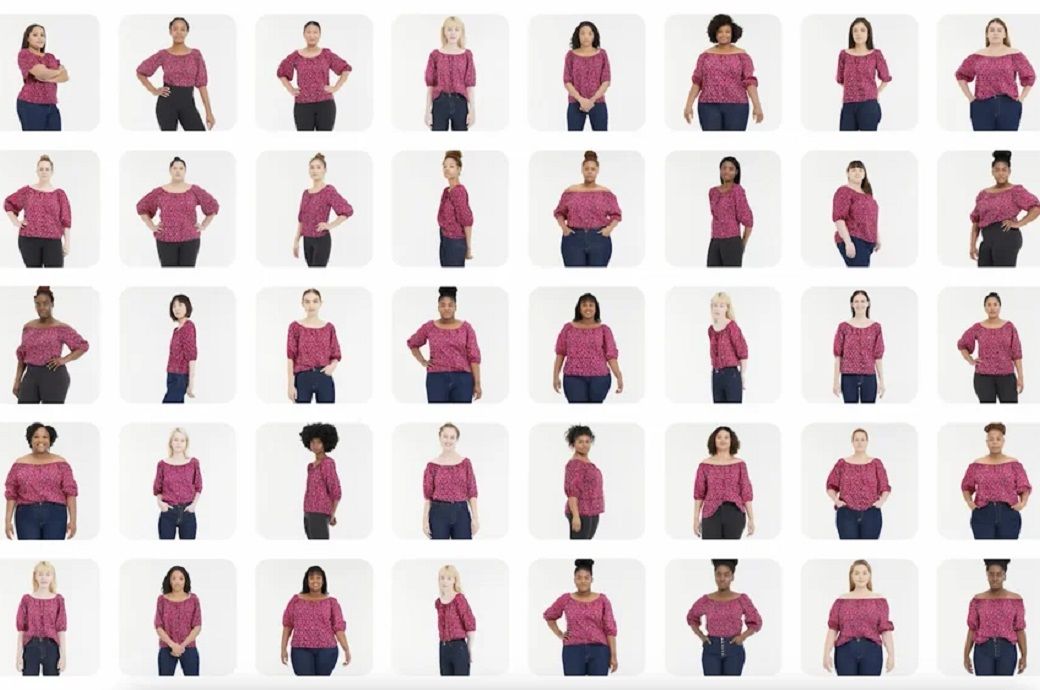

The process was then repeated using millions of random image pairs featuring various garments and people, culminating in a tool that enables users to realistically visualise how a top looks on a model of their choice.

The VTO feature is now available for women’s tops from brands including Anthropologie, LOFT, H&M, and Everlane across Google’s Shopping Graph. As the technology evolves, the tool will become even more precise and extend to additional brands.

Fibre2Fashion News Desk (NB)